From 14 minutes to 3 minutes per wound without changing the workflow: edge-first AI that clinicians actually use

Challenges

Frontline nurses were measuring pressure injuries and post-surgical wounds with paper rulers and forms. Measurements varied by clinician and lighting. At the end of the shift, data was re-keyed into the EHR. Throughput suffered; so did auditability. The mandate: embed AI into the existing mobile app without adding any steps.

How we helped

We identified three root causes and shipped a fix for each. First, a vision model trained on one of the largest labeled wound image sets (>12M datapoints) detects wound edges and calibrates scale from a standardized marker. Second, on-device inference via TensorFlow Lite runs in under 200 ms on iOS and Android, so it works offline at the bedside and keeps attention on the patient, not a spinner. Third, a FHIR-native connector writes images, dimensions, and notes straight into Epic, Cerner, or MEDITECH, with no double entry and HIPAA/GDPR compliance intact.
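To make the marker-based scale calibration concrete, here is a minimal Python sketch of the arithmetic, not the production pipeline. It assumes the marker lies in the same plane as the wound, the camera is roughly perpendicular to it, and the model has already produced a binary wound mask. The names `Calibration`, `calibrate`, and `wound_dimensions` are hypothetical, and the axis-aligned bounding box is a simplification chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Calibration:
    """Scale factor derived from a reference marker of known physical size."""
    mm_per_px: float


def calibrate(marker_diameter_mm: float, marker_diameter_px: float) -> Calibration:
    """Convert the marker's known size and its measured pixel size into a scale."""
    if marker_diameter_mm <= 0 or marker_diameter_px <= 0:
        raise ValueError("marker dimensions must be positive")
    return Calibration(mm_per_px=marker_diameter_mm / marker_diameter_px)


def wound_dimensions(mask: list[list[int]], cal: Calibration) -> dict[str, float]:
    """Return bounding-box length/width (mm) and area (mm^2) for a binary mask.

    Assumption: `mask` is a list of rows of 0/1 values, where 1 marks a wound pixel.
    """
    rows = [r for r, row in enumerate(mask) if any(row)]
    cols = [c for row in mask for c, v in enumerate(row) if v]
    if not rows:
        return {"length_mm": 0.0, "width_mm": 0.0, "area_mm2": 0.0}

    height_px = max(rows) - min(rows) + 1
    width_px = max(cols) - min(cols) + 1
    area_px = sum(1 for row in mask for v in row if v)

    return {
        "length_mm": height_px * cal.mm_per_px,
        "width_mm": width_px * cal.mm_per_px,
        # Area scales with the square of the linear scale factor.
        "area_mm2": area_px * cal.mm_per_px ** 2,
    }
```

For example, a 20 mm marker that spans 100 pixels gives 0.2 mm per pixel, so a wound region 2 pixels tall and 3 pixels wide measures about 0.4 mm by 0.6 mm. A real deployment would also need to handle perspective distortion and curved body surfaces, which this sketch ignores.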

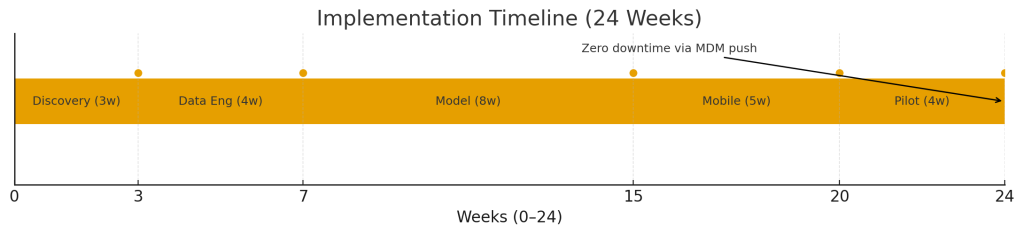

Delivery timeline

- Weeks 0–3: Discovery + clinical shadowing; baseline variance study

- Weeks 4–7: Data engineering (augment 2M legacy images; synthetic lighting variants)

- Weeks 8–15: Model development to IoU 0.89; TFLite quantization

- Weeks 16–20: Mobile integration (React Native SDK + native camera plugins)

- Weeks 21–24: Pilot & validation; MDM push upgrade with zero production downtime
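The IoU (intersection over union) target in the timeline compares a predicted wound mask with a clinician-labeled one. The sketch below shows the metric for a single pair of binary masks in plain Python. How the 0.89 figure was aggregated (per image, per dataset, at what threshold) is not stated here, so treat the function name `iou` and the empty-mask convention as assumptions.

```python
def iou(pred: list[list[int]], truth: list[list[int]]) -> float:
    """Intersection over union of two same-shaped binary masks (rows of 0/1)."""
    if len(pred) != len(truth) or any(len(p) != len(t) for p, t in zip(pred, truth)):
        raise ValueError("masks must have the same shape")

    intersection = 0
    union = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            p, t = bool(p), bool(t)
            intersection += p and t  # pixel is wound in both masks
            union += p or t          # pixel is wound in at least one mask

    # Assumed convention: two empty masks count as a perfect match.
    return 1.0 if union == 0 else intersection / union
```

With a prediction of `[[1, 1, 0], [0, 1, 0]]` and a label of `[[1, 0, 0], [0, 1, 1]]`, two pixels overlap out of four covered by either mask, so the score is 0.5.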

Technologies we used

Mobile

Backend

Compliance

Key takeaways

In our delivery practice, the work moves fastest when we treat the handset as production: set edge budgets (latency, battery, offline behavior) before tuning anything. Map every field to FHIR up front so integration turns into configuration, and validate against a physical reference to earn clinical trust. Ship as an SDK and roll out via MDM so you can pilot, stage, and roll back, laying the rails for whatever you build next.
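One way to read "map every field to FHIR up front" is as a declarative field map that drives the construction of a FHIR Observation. The Python sketch below illustrates that idea under stated assumptions: the code system URL and the codes in `FIELD_MAP` are hypothetical placeholders (a real integration would use the terminology agreed with the EHR team), and `to_observation` is an illustrative helper, not the connector described above. The `resourceType`, `status`, `code`, `component`, and `valueQuantity` elements and the UCUM system URL are standard FHIR.

```python
import json

UCUM = "http://unitsofmeasure.org"
# Hypothetical code system, used only for illustration.
HYPOTHETICAL_CODE_SYSTEM = "http://example.org/fhir/CodeSystem/wound-measurement"

# Declarative mapping: app field name -> code, display text, and UCUM unit.
FIELD_MAP = {
    "length_cm": {"code": "wound-length", "display": "Wound length", "unit": "cm"},
    "width_cm": {"code": "wound-width", "display": "Wound width", "unit": "cm"},
    "area_cm2": {"code": "wound-area", "display": "Wound area", "unit": "cm2"},
}


def to_observation(measurement: dict, patient_ref: str, effective: str) -> dict:
    """Build a FHIR Observation dict from app fields using FIELD_MAP."""
    components = []
    for field, spec in FIELD_MAP.items():
        if field not in measurement:
            continue  # unmapped or missing fields are skipped, not guessed
        components.append({
            "code": {"coding": [{
                "system": HYPOTHETICAL_CODE_SYSTEM,
                "code": spec["code"],
                "display": spec["display"],
            }]},
            "valueQuantity": {
                "value": measurement[field],
                "unit": spec["unit"],
                "system": UCUM,
                "code": spec["unit"],
            },
        })

    return {
        "resourceType": "Observation",
        "status": "final",
        "code": {"coding": [{
            "system": HYPOTHETICAL_CODE_SYSTEM,
            "code": "wound-assessment",
            "display": "Wound assessment",
        }]},
        "subject": {"reference": patient_ref},
        "effectiveDateTime": effective,
        "component": components,
    }


if __name__ == "__main__":
    obs = to_observation(
        {"length_cm": 4.2, "width_cm": 3.1},
        patient_ref="Patient/example",
        effective="2024-01-01T10:00:00Z",
    )
    print(json.dumps(obs, indent=2))
```

Because the mapping lives in data, supporting a new measurement means adding one entry to `FIELD_MAP` and leaving the builder untouched, which is the sense in which integration becomes configuration.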