After spending hundreds of hours in the trenches with clinicians to understand the “Universe B” reality of care, we see a massive disconnect, one that only a resilient clinical AI framework can bridge.

In the air-conditioned boardrooms of Silicon Valley, the future of healthcare looks like a “Formula 1” race car. Pitch decks are filled with sleek screenshots of autonomous clinical intelligence and promises of GPT-5-powered breakthroughs that can predict a patient’s heart failure ten years before the first symptom.

But as a CIO, you know that the real future looks a little different.

The real future is a Tuesday morning at a community clinic where the WiFi collapses under the “emotional weight” of a second browser tab. It’s a world where a doctor is trying to save a life while simultaneously fighting a printer that just fainted and a fax machine spitting out 14 pages of illegible handwriting.

This is the Universe A vs. Universe B gap. And in 2026, this gap has created a full-blown commodity AI crisis.

Key insights

To move from innovation on paper to operational excellence, healthcare CIOs must shift focus from experimental “toys” to resilient, integrated “tools”. Below are the strategic insights for navigating the current commodity AI crisis:

- Strategic shift: Transition from Experimental AI (toys) to Operational AI (tools)

- Real-world utility: Improve legacy systems rather than adding AI-layered engines that fail in real-world clinic environments

- Workflow integration: Avoid “thin wrappers” that increase click fatigue; prioritize agents with bi-directional EHR write-back

- Clinical trust: Ensure safety through verifiable citation audits and longitudinal patient memory

- Operational resilience: Build for resilience to turn stagnant pilots into scalable, high-impact clinical assets

The “PowerPoint intelligence” trap: when you make a Tesla for a donkey cart

Most of the AI solutions landing on your desk today are what we – tech leaders – call “thin wrappers.”

Think of a thin wrapper as a digital sticker. It’s a pretty user interface (the “look”) stretched over a general-purpose AI brain (like the one you prompt to write birthday poems). It doesn’t actually “know” your hospital; it’s just a middleman passing messages back and forth.

For a CIO, buying a thin wrapper is the quickest way to accidentally sabotage your staff.

Why? Because a wrapper expects Universe A:

- It wants the doctor to copy-paste data (adding more clicks to an already exhausted day)

- It lives in a separate browser tab (the “second tab” that crashes the system)

- It has no “memory” of the patient’s history unless you manually feed it

In short, it’s a Formula 1 engine being bolted onto a donkey cart. It’s too heavy, it’s too expensive, and it doesn’t have the keys to the ignition.

The CIO’s new mandate: resilience over speed

In this era of hype, it’s easy to get distracted by “moonshots.” But your clinicians don’t need another moonshot.

They need software that doesn’t faint.

They need systems that can decipher Dr. Johnson’s 2019 handwriting and handle a patient showing up with their records on a CD-ROM.

The true value of a clinical AI framework isn’t in having the fastest AI or the most famous LLM.

It’s in finding the most resilient integration. It’s about choosing integrated clinical agents—tools that are “hired” into your system, have their own keys to the EHR, and are built to survive the beautiful, messy, and often frustrating chaos of a real clinic day.

A sustainable healthcare growth strategy in 2026 relies on software resilience rather than speed. This approach ensures that clinical tools do not fail during critical patient care moments.

Navigating the clinical AI framework architectural spectrum

To separate “innovation on PowerPoint” from real utility, your clinical AI framework must distinguish between two very different animals.

One is the “thin wrapper”: the disconnected prototype

Think of this as a “digital sticker.” It’s a sleek UI that simply passes your patient data to a public brain like GPT-4. It has no medical logic of its own. In the chaotic reality of “Universe B,” the wrapper is a burden.

It demands a second browser tab, forces doctors into a “copy-paste” loop, and has zero memory of the patient’s history. It’s an unintegrated point solution that forgets everything the moment you hit refresh.

The other is the integrated clinical agent: your digital employee

This is a “workflow-aware” system. Instead of waiting for you to feed it, it uses RAG (Retrieval-Augmented Generation) to “read” the EHR and bi-directional APIs to “write” back.

It doesn’t just talk; it acts.

It lives inside the EHR via SMART on FHIR, acting as a buffer that understands a patient’s five-year longitudinal history without being asked.
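To make that read-then-write loop concrete, here is a minimal Python sketch. Everything in it is hypothetical: `InMemoryEhr` stands in for a real EHR interface (a production agent would go through the EHR's FHIR endpoints), and simple word-overlap scoring stands in for the retrieval step of RAG. The generation step is left out entirely.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Note:
    note_id: str
    year: int
    text: str


@dataclass
class InMemoryEhr:
    """Hypothetical stand-in for an EHR API; not a real FHIR client."""
    notes: dict[str, list[Note]]
    problem_list: dict[str, list[dict]] = field(default_factory=dict)

    def read_notes(self, patient_id: str) -> list[Note]:
        return self.notes.get(patient_id, [])

    def write_problem(self, patient_id: str, problem: str, source_note_id: str) -> None:
        entry = {"problem": problem, "source": source_note_id}
        self.problem_list.setdefault(patient_id, []).append(entry)


def retrieve(notes: list[Note], query: str, top_k: int = 2) -> list[Note]:
    """Rank notes by words shared with the query (newest first on ties)."""
    terms = set(query.lower().split())
    scored = [(len(terms & set(n.text.lower().split())), n) for n in notes]
    hits = [(score, n) for score, n in scored if score > 0]
    hits.sort(key=lambda pair: (-pair[0], -pair[1].year))
    return [n for _, n in hits[:top_k]]


def propose_problem(ehr: InMemoryEhr, patient_id: str, problem: str) -> list[Note]:
    """Read the chart, find supporting notes, write back only if evidence exists."""
    evidence = retrieve(ehr.read_notes(patient_id), problem)
    if evidence:
        ehr.write_problem(patient_id, problem, evidence[0].note_id)
    return evidence


ehr = InMemoryEhr(notes={"p1": [
    Note("n-2019", 2019, "patient reports shortness of breath on exertion"),
    Note("n-2021", 2021, "echo shows reduced ejection fraction heart failure suspected"),
    Note("n-2023", 2023, "annual flu vaccination given"),
]})
evidence = propose_problem(ehr, "p1", "heart failure")
```

The design point is that the agent pulls the history itself and records which note justified the write-back, so nobody has to copy and paste. With no supporting note, nothing is written.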

Why clinical infrastructure wins over intelligence

The “AI-washing” crisis thrives on the collision between two worlds: Universe A, where a sales deck promises a model that predicts disease a decade in advance, and Universe B, where a physician is squinting at a blurry, handwritten fax because the clinic WiFi just died.

This is the ultimate reality check for any healthcare leader.

Without a robust clinical AI framework to bridge this gap, you aren’t buying a revolutionary tool; you’re buying an expensive interruption that can’t survive a rainy Tuesday.

Real clinical value isn’t measured by how fast an AI thinks in a lab, but by how well it handles the messy, legacy infrastructure of a real-world hospital.

Our takeaway: In a real clinic, AI is only as good as the data it can actually reach and the infrastructure it doesn’t break. If your AI can’t handle the “handwritten fax” reality of Universe B, it isn’t an innovation—it’s an interruption.

The CIO checklist: 10 questions to separate the “skin” from the “brain”

To move your organization from “innovation theater” to operational excellence, your clinical AI framework needs teeth.

When a vendor starts pitching the wonders of Universe A, use these ten questions to pull the conversation back down to the linoleum floor of Universe B.

Deep integration & workflow resilience

This is about survival. If the tool adds a single second of “click fatigue,” it will be abandoned.

1. The tab test: Does this solution require a separate login or browser tab, or is it natively embedded in our EHR?

If a doctor has to “tab-hop” to find an answer, the system is already failing the workflow.

2. Write-back capabilities: Can the agent update structured fields like orders, problem lists, or vitals?

A “thin wrapper” just writes a story for you to copy; an integrated agent actually does the work.

3. Low-bandwidth performance: How does the architecture handle spotty clinic WiFi?

If the system lags or the page refreshes, does the AI lose its “train of thought,” or is there a resilient local cache?
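One way to picture the “resilient local cache” in question 3: the client saves the working draft locally on every change and uploads it only when the network cooperates. The sketch below is an illustration under stated assumptions, not any vendor’s design. `DraftCache`, the JSON file layout, and the `send` callback are all hypothetical names.

```python
from __future__ import annotations

import json
import tempfile
from pathlib import Path
from typing import Callable


class DraftCache:
    """Keeps in-progress notes on local disk so a refresh or WiFi drop loses nothing."""

    def __init__(self, path: Path):
        self.path = path

    def save(self, encounter_id: str, draft: str) -> None:
        data = self._load()
        data[encounter_id] = {"draft": draft, "synced": False}
        self.path.write_text(json.dumps(data))

    def restore(self, encounter_id: str) -> str | None:
        entry = self._load().get(encounter_id)
        return entry["draft"] if entry else None

    def sync(self, send: Callable[[str, str], None]) -> int:
        """Try to upload unsynced drafts; keep any that fail for the next attempt."""
        data = self._load()
        sent = 0
        for encounter_id, entry in data.items():
            if entry["synced"]:
                continue
            try:
                send(encounter_id, entry["draft"])
            except ConnectionError:
                continue  # network is down; the draft stays cached
            entry["synced"] = True
            sent += 1
        self.path.write_text(json.dumps(data))
        return sent

    def _load(self) -> dict:
        return json.loads(self.path.read_text()) if self.path.exists() else {}


def offline(encounter_id: str, draft: str) -> None:
    raise ConnectionError("clinic WiFi is down")


cache = DraftCache(Path(tempfile.mkdtemp()) / "drafts.json")
cache.save("enc-42", "Pt c/o chest pain, onset 2 days ago")
failed_sync = cache.sync(offline)      # nothing uploads while offline
restored = cache.restore("enc-42")     # the draft is still there after a "refresh"
uploaded: list[str] = []
ok_sync = cache.sync(lambda eid, draft: uploaded.append(eid))
```

A vendor with a real answer to question 3 should be able to describe something equivalent: where the draft lives while the connection is down, and what happens when it comes back.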

Data provenance & clinical logic

In medicine, a “guess” is a liability. You need to know exactly why the AI said what it said.

4. The citation audit: Can the AI show the “receipts”?

You shouldn’t have to trust the AI; you should be able to click a link that takes you to the specific lab result or 2019 note it used to form its conclusion.

5. Unstructured data ingestion: Can it handle the “Fax & CD-ROM” reality?

A high-speed engine is useless if it can’t ingest the handwritten PDF or legacy imaging report that holds the key to the patient’s history.

6. Longitudinal memory: Does the agent “remember” the patient from their 2021 visit?

A wrapper sees every prompt as a first date; an agent understands the five-year story of the human being in front of you.
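The citation audit in question 4 can be made mechanical. Here is a minimal sketch, assuming the agent returns each claim together with the IDs of the chart documents it relied on (a hypothetical output shape, not a standard). Any claim with no citation, or with a citation that does not resolve to a real document, gets flagged.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Claim:
    text: str
    source_ids: tuple[str, ...]


def audit_citations(claims: list[Claim], chart: dict[str, str]) -> list[tuple[str, str]]:
    """Return (claim text, reason) for every claim that cannot be traced to the chart."""
    findings = []
    for claim in claims:
        if not claim.source_ids:
            findings.append((claim.text, "no citation"))
            continue
        missing = [s for s in claim.source_ids if s not in chart]
        if missing:
            findings.append((claim.text, f"unknown source: {', '.join(missing)}"))
    return findings


# Hypothetical chart contents, keyed by document ID.
chart = {
    "lab-2024-03": "Potassium 5.9 mmol/L",
    "note-2019-11": "Started lisinopril 10 mg daily",
}
claims = [
    Claim("Potassium is elevated", ("lab-2024-03",)),
    Claim("Patient has been on lisinopril since 2019", ("note-2019-11",)),
    Claim("Patient has a penicillin allergy", ()),
    Claim("Creatinine is rising", ("lab-2024-07",)),
]
findings = audit_citations(claims, chart)
```

Here the last two claims are flagged: one cites nothing, the other cites a lab result that is not in the chart. Note the limit of this check: it proves a citation points at a real document, not that the document supports the claim. That second judgment still belongs to the clinician who clicks through.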

Governance, security, & TCO

This is about protecting the hospital’s future and its bottom line.

7. Model agnosticism: What happens if the underlying “brain” (like OpenAI) changes its pricing or terms? Can the vendor swap models without breaking our entire integration?

8. The hallucination gate: What specific clinical “logic gates” are in place?

If the AI suggests a dosage that conflicts with hospital guidelines, what—exactly—stops that note from being signed?

9. Auditability: Is there a “logs-of-reasoning” trail?

For every action the agent takes, your legal and risk teams must be able to see the step-by-step logic it followed.

10. Scalability pricing: Does the cost scale by “tokens” (which are as unpredictable as the weather), or by clinical outcome or per user?

You need a budget that survives Universe B.
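Questions 8 and 9 can be pictured together: a deterministic check sits between the model’s suggestion and the signature, and every decision leaves a log entry. The sketch below is illustrative only. The guideline table, drug names, and dose limit are made-up placeholders rather than clinical guidance, and `gate_order` and `AuditLog` are hypothetical names, not any vendor’s API.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timezone

# Placeholder limits for illustration only; NOT clinical guidance.
MAX_DAILY_DOSE_MG = {"drug_x": 40.0}


@dataclass
class AuditLog:
    entries: list[dict] = field(default_factory=list)

    def record(self, step: str, detail: str) -> None:
        self.entries.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "step": step,
            "detail": detail,
        })


def gate_order(drug: str, daily_dose_mg: float, log: AuditLog) -> bool:
    """Return True only if the suggested dose passes the guideline check."""
    log.record("suggested", f"{drug} {daily_dose_mg} mg/day")
    limit = MAX_DAILY_DOSE_MG.get(drug)
    if limit is None:
        # Fail closed: no guideline on file means no automatic sign-off.
        log.record("blocked", f"no guideline on file for {drug}")
        return False
    if daily_dose_mg > limit:
        log.record("blocked", f"exceeds limit of {limit} mg/day")
        return False
    log.record("passed", f"within limit of {limit} mg/day")
    return True


log = AuditLog()
can_sign_high = gate_order("drug_x", 80.0, log)     # over the limit: blocked
can_sign_ok = gate_order("drug_x", 20.0, log)       # within the limit: passes
can_sign_unknown = gate_order("drug_y", 5.0, log)   # unknown drug: blocked
```

The point for a vendor conversation is that the gate is plain code, not another prompt: the answer to “what stops that note from being signed?” should be a rule your risk team can read, with a log entry for each decision.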

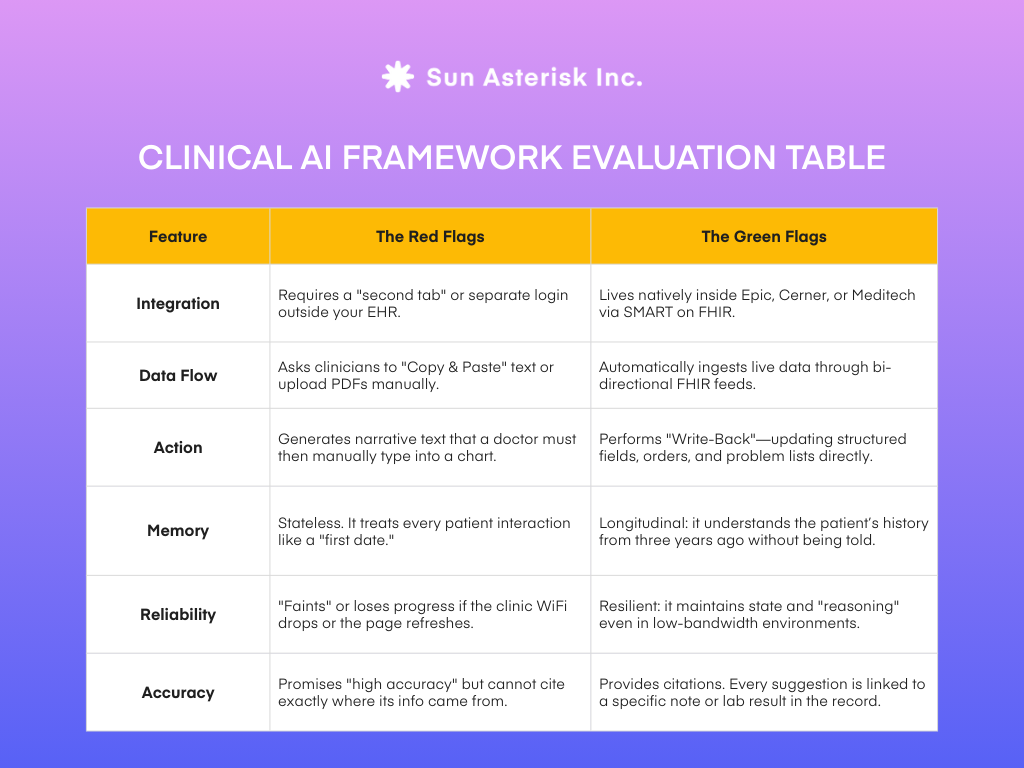

Evaluate red and green flags with this tactical guide

To bring this clinical AI framework to life, you need a way to quickly distinguish between a vendor selling a polished dream and one offering a practical tool.

If you’re sitting in a demo and it feels too good to be true, it’s likely a “Universe A” solution.

Use this comparison example to spot the red flags of a thin wrapper and the green flags of a true integrated agent.

Our final conclusion

Real innovation isn’t about swapping your healthcare infrastructure for flashy AI software that your team isn’t trained to use and your existing systems can’t support.

True progress is building tools that make the legacy systems you can’t replace work faster, smarter, and more reliably.

👉 Take the next step

If you have a successful pilot gathering dust, let our team help you transform that experiment into a scalable, operational tool for your healthcare organization.