Will AI replace programmers? A quick search turns up ~10.6 million results – a pretty good proxy for how many developers are stress-checking the same question at 2 a.m.

The volume tells a story: this isn’t just a panel debate; it’s a lived concern inside teams, Slack channels, and sprint reviews.

If you have programmer teams, check on them. The existential loop may be loud this year: LLMs can scaffold services, write tests, and pass linters at a pace no human touches. Developers are “vibe coding” with agents; tech leaders like you are watching AI budgets like hawks.

And yet, while everyone seems busy with AI, “fast code” isn’t the same as valuable software.

Will AI replace programmers? Short answer: not replaced, but reframed.

The job shifts from operating a keyboard to orchestrating an AI teammate with business context, guardrails, and proof.

The current state of AI & engineering: use, trust, skills, money

Developers have already made AI a daily habit: 84% say they use or plan to use AI tools, and 51% of professional devs report daily use. Adoption is here, even as teams still calibrate when to trust outputs.

Under controlled tasks, AI assistance moves the needle: GitHub reports ~55% faster completion and up to 50% faster time-to-merge when changes flow through proper gates. (In the wild, gains show up when the team pairs speed with verification, not vibes.)

Organizations, meanwhile, are scrambling for talent that can actually wield these tools. From Pluralsight’s 2025 data: 95% of companies now check for AI skills in hiring; 70% call them “mandatory” or “highly preferred.”

And here’s the kicker: two-thirds have abandoned AI projects for lack of in-house skills. That’s not a tooling gap; that’s an enablement gap.

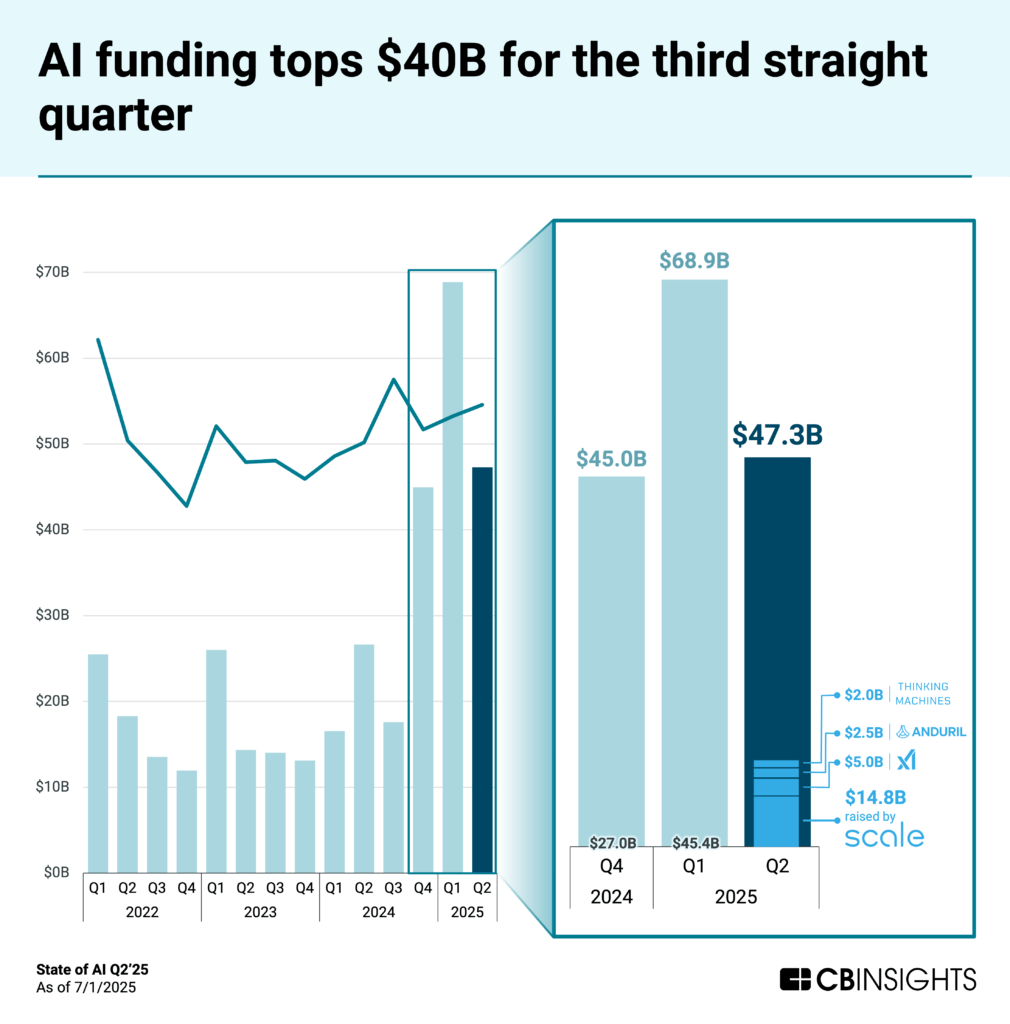

Zooming out, the capital is not slowing down.

CB Insights’ Q2’25 read shows H1 2025 AI funding already surpassed all of 2024. This is a signal that the tooling and platform surface will keep shifting under our feet. Your operating model has to mature; the wave isn’t receding.

And workers feel both sides of it. 52% of U.S. workers say they’re worried about AI’s future impact at work – so leaders need to make enterprise AI adoption safe, auditable, and clearly useful, not just new.

AI can code. Can it know why?

Ask a model to “build an app,” and you’ll get plausible code that compiles.

Now, ask it to defend a requirement, anticipate failure modes, or push back on a brittle data contract – and you’ll see the boundary.

The research IBM highlights from MIT CSAIL is blunt about that edge: today’s systems do well on small, self-contained snippets, but they struggle with long-horizon code planning. That’s the part where you reason about trade-offs (performance, memory, quality), how local decisions ripple through a codebase, and how to collaborate your way to the right design.

Benchmarks often miss this because they test toy tasks, not the testing, maintenance, and human-in-the-loop work that real software demands. Models also lack persistent project state and falter in low-resource languages or specialized internal libraries – exactly where many enterprises live.

At the same time, usage is not theoretical.

IBM reports that in 2025, 82% of developers use AI coding tools weekly or more, 59% rely on three or more assistants, and 78% report clear productivity gains.

Those wins are real, but they’re mostly micro-task wins. The macro win still depends on planning, context, and verification that the business cares about.

Here’s our hard-earned truth: AI becomes a force multiplier the minute you anchor it to why (user, constraint, success condition) and run its output through the same gates your team already trusts—prompt → PR behind tests and reviews, not prompt → prod.

That’s how you convert snippet-level speed into system-level value.

Will AI replace programmers? The real role shift

AI isn’t replacing programmers, but it is exposing who can run the interface between intent, AI, and safety. The best engineers in 2025 don’t memorize more syntax; they own that interface.

And yes, the speed is real: developers are adopting assistants at scale and report daily use; controlled studies show ~55% faster task completion, and time-to-merge improves by up to 50% when changes flow through proper engineering gates.

But raw generation isn’t software. The hard part is still long-horizon code planning: reasoning across modules, anticipating the global consequences of local decisions, and collaborating to a design that holds under load.

That’s where current systems stumble, and why engineers must anchor the why, constrain the solution, and verify the result.

What if you skip verification? You pay for it later. Peer-reviewed work shows developers using AI assistants can produce less secure code while feeling more confident about it, a perfect recipe for invisible risk.

Treat verification minutes as a real budget line, log prompts/model versions/tool calls, and make reproducibility non-negotiable. Because if you can’t replay the decision, you can’t debug it (or pass audit).
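
To make "log prompts/model versions/tool calls" concrete, here is a minimal sketch of one replayable record per AI-assisted change. The class name, field names, and the fingerprint idea are our own illustrative assumptions, not a standard schema; adapt them to whatever your audit process already expects.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


@dataclass
class AIChangeRecord:
    """One log entry per AI-assisted change (hypothetical schema)."""
    prompt: str
    model_version: str                              # the exact model identifier that was called
    tool_calls: list = field(default_factory=list)  # e.g. [{"name": "run_tests"}]
    verification_minutes: float = 0.0               # review time spent on this change

    def fingerprint(self) -> str:
        # Hash only the inputs that determine the run, so two records with the
        # same prompt, model version, and tool calls can be matched for replay.
        payload = json.dumps(
            {
                "prompt": self.prompt,
                "model_version": self.model_version,
                "tool_calls": self.tool_calls,
            },
            sort_keys=True,
        )
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def to_json_line(self) -> str:
        # One JSON object per line is easy to append to a log file and grep later.
        row = asdict(self)
        row["fingerprint"] = self.fingerprint()
        return json.dumps(row, sort_keys=True)
```

Appending `record.to_json_line()` to a file alongside the PR is usually enough to start; the point is that the prompt, model version, and tool calls travel with the change.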

Benchmarks aimed at repo-level tasks exist for a reason: we still need engineers to stitch snippets into systems.

🔑 Summary

Here’s the frame we teach our tech team:

• Why-first framing

One paragraph: the user, the constraint, the acceptance test. If “done” isn’t defined, AI will happily generate forever.

• Prompt → pull request (PR), not prompt → prod

Every AI change lands as a PR behind unit/integration tests, SAST, and secrets scanning. That’s how the 55% lab speed turns into real-world merge speed.

• Small-before-frontier

Let a smaller model draft; escalate to a frontier model only when CI says the easy path failed. Cheaper, easier to review, and it keeps humans in the loop where it matters.

• Budget the verification line item

If review time isn’t dropping by week three, you’re not seeing net productivity – no matter how magical generation feels.

• Log for reproducibility

Prompts, model version, tool calls. If you can’t replay the decision, you can’t debug (or pass audit).
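
The small-before-frontier rule from the list above can be sketched in a few lines. This is an illustration under assumptions, not a prescribed implementation: `small`, `frontier`, and `ci_passes` are hypothetical callables you would wire to your own model clients and CI pipeline.

```python
from typing import Callable, Tuple

Draft = Callable[[str], str]   # task description -> proposed patch
Gate = Callable[[str], bool]   # proposed patch -> did CI pass?


def draft_with_escalation(
    task: str, small: Draft, frontier: Draft, ci_passes: Gate
) -> Tuple[str, str, bool]:
    """Try the small model first; call the frontier model only if CI rejects the draft.

    Returns (tier_used, patch, ci_passed). The patch still lands as a PR for
    human review either way; this function only decides which model drafts it.
    """
    patch = small(task)
    if ci_passes(patch):
        return "small", patch, True
    # The easy path failed CI, so escalate once to the larger model.
    patch = frontier(task)
    return "frontier", patch, ci_passes(patch)
```

Returning the tier alongside the patch makes it easy to log how often escalation happens, which is a rough signal of whether the small model is earning its place.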

What tech leaders should do now

Treat adoption as a rhythm, not a broadcast.

A weekly, ten-minute, why-first demo on a task a senior teammate already owns does more than “raise awareness”; it builds muscle memory.

You see the workflow end-to-end in their language, in their repo, under their constraints. And because you capture two simple numbers every time – time saved and the minutes it took to verify the output – opinions give way to evidence. Three weeks of that is usually enough to tell you whether to scale or stop; it’s remarkable how much politics evaporates when everyone can point at the same two numbers.
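
The two numbers are simple enough to track in a spreadsheet, but a short sketch shows the arithmetic. The names are hypothetical, and the "scale only if net positive" rule is our assumption for illustration; your own threshold may be stricter.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DemoResult:
    minutes_saved: float      # estimated time saved versus doing the task by hand
    minutes_to_verify: float  # time spent reviewing and testing the AI output


def net_minutes(results: List[DemoResult]) -> float:
    """Total time saved minus total verification time across the demos."""
    return sum(r.minutes_saved - r.minutes_to_verify for r in results)


def recommend(results: List[DemoResult]) -> str:
    # Assumed decision rule: scale only when the demos come out net positive.
    return "scale" if net_minutes(results) > 0 else "stop"


# Three weekly demos with made-up numbers.
weeks = [DemoResult(40, 15), DemoResult(30, 20), DemoResult(25, 10)]
print(net_minutes(weeks), recommend(weeks))
```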

Next, focus your engineers’ training on orchestration, not party tricks. The skill you’re buying is the ability to turn intent into a safe, merged change: framing the why, constraining the solution, landing prompt → PR behind tests.

You can start by baselining real skills with small, hands-on checks instead of self-reports; then train to the gaps your own assessments (and industry research like Pluralsight’s) actually reveal. People who can take a one-paragraph problem and produce a reviewed, reproducible pull request are your force multipliers.

Once you move beyond pilots, negotiate vendor contracts with price caps, version pinning, and continuity language that survives vendor shifts.

The tooling surface is moving fast. The CB Insights data cited above is clear about the pace and premium around AI, so lock predictability into your contracts.

Your roadmap can evolve; your cost curve shouldn’t lurch because a model or ownership changed underneath you.

And last but not least, measure work the way the business feels it.

Lines generated are vanity; time-to-merge, defect escape, and verification cost are operational truths. Time-to-merge tells you if speed is real. Defect escape keeps quality honest. Verification cost captures the hidden tax that makes “fast generation” either a win or a wash.

Track those three and you’ll know, without debate, whether AI is paying rent on your team.
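
As a rough sketch, the three metrics can be computed from per-PR records like this. The record shape is hypothetical, and the definitions are assumptions chosen for simplicity: defect escape is counted as the share of merged PRs with at least one defect found after release, and verification cost as average review minutes per PR.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median
from typing import List


@dataclass
class MergedPR:
    opened_at: datetime
    merged_at: datetime
    verification_minutes: float  # review and test time spent on this PR
    escaped_defects: int = 0     # defects traced back to this PR after release


def median_hours_to_merge(prs: List[MergedPR]) -> float:
    """Time-to-merge: median hours from PR opened to PR merged."""
    return median((p.merged_at - p.opened_at).total_seconds() / 3600 for p in prs)


def defect_escape_rate(prs: List[MergedPR]) -> float:
    """Defect escape: share of merged PRs with at least one post-release defect."""
    return sum(1 for p in prs if p.escaped_defects > 0) / len(prs)


def verification_minutes_per_pr(prs: List[MergedPR]) -> float:
    """Verification cost: average review minutes per merged PR."""
    return sum(p.verification_minutes for p in prs) / len(prs)
```

Computing the same three functions over AI-assisted and non-assisted PRs separately gives a side-by-side view; all three assume a non-empty list of merged PRs.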

So, will AI replace programmers?

No – the coding changes; the craft remains. 2025’s data says usage is up, capital is in, and skills are the choke point. The winning teams turn AI into a dependable teammate: they define why, they verify, and they ship.

The title on the card stays “Engineer.” The job is to tell a computer exactly what matters and prove it did the right thing.

“As coding becomes easier, more people should code, not fewer! One question I’m asked most often is what someone should do who is worried about job displacement by AI. My answer is: Learn about AI and take control of it, because one of the most important skills in the future will be the ability to tell a computer exactly what you want, so it can do that for you.”

— Andrew Ng, Founder of DeepLearning.AI

Conclusion

Let’s retire the doomscrolling and get back to shipping.

AI can write code; your team makes it matter. Don’t replace programmers. Instead, re-skill them into AI orchestrators.

How? By funding why-first training, keeping prompt → PR guardrails, and measuring verification cost so today’s speed becomes safely shipped value tomorrow.

👉 Want a pragmatic plan for your AI stack and team? Talk to our AI engineering experts and we’ll sketch a sensible path forward.