AI coding agents (Cursor, Lovable, Windsurf, etc.) are getting scary-good at turning intent into working code. But the smarter they get, the pricier they are to run (have you checked your AI budgets?).

Vendors are reacting with rate limits, price hikes, and usage/pass-through pricing, and many enterprises say token-metered pricing is hard to budget.

That friction stalls adoption.

CB Insights reports that “token-metered pricing is difficult to budget,” and that reasoning models “inflate output-token volume roughly 20x,” compounding run costs.

AI coding agents feel like magic, until the bill lands. If you’re hearing “tokens” more than “test coverage,” this guide is for you.

We’ll show how to keep the vibe of intent-driven coding while making costs predictable, code safe, and vendors replaceable.

What is “vibe coding”?

For developers, vibe coding is a laid-back state of flow. Imagine lo-fi beats in the background, coffee within reach, dark mode glowing, and the code almost writing itself. It feels productive, even magical.

Vibe coding is the switch from typing line-by-line to describing what you want and steering.

Here’s how it works in practice:

You state the goal (“normalize supplier invoices and load to our warehouse”), the constraints (“Postgres 15, Airflow 2, internal style guide”), and a few examples. The agent drafts code, runs tests, fixes errors, and iterates. You nudge it along, like pair programming with a tireless junior.

Vibe coding shines when you need momentum on small, bounded work like quick scripts, glue code, tests, refactors, and migrations – where fast iteration matters more than heavy upfront design.

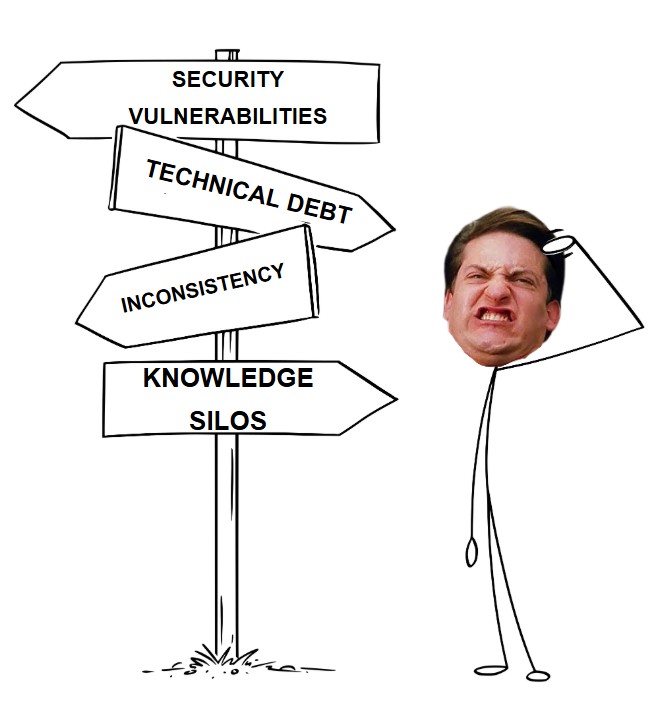

But left unchecked, it feels productive while quietly accumulating a mess: you come back the next day to code that has no plan and is hard to scale or hand off.

For production systems and collaborative repos, it only works inside a structure of clear goals, test gates, and reviews, and it’s best kept to modules and components, not whole apps.

Finally, don’t let agents run unattended for long. Past a few rounds, errors compound, and your code’s quality decays.
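A hard cap on repair rounds is one simple way to enforce this. Below is a minimal Python sketch. `draft` and `run_tests` are hypothetical callables standing in for your agent and your CI, and this is not any particular vendor's API.

```python
from typing import Callable, Optional, Tuple


def bounded_agent_loop(
    draft: Callable[[Optional[str]], str],
    run_tests: Callable[[str], Optional[str]],
    max_rounds: int = 3,
) -> Tuple[Optional[str], int]:
    """Let an agent draft and repair code, but never for more than max_rounds.

    draft(feedback) returns candidate code. feedback is None on the first round
    and holds the previous test failure message afterwards.
    run_tests(code) returns None when tests pass, or a failure message.
    Returns (code, rounds_used). code is None when every round failed.
    """
    feedback: Optional[str] = None
    for round_no in range(1, max_rounds + 1):
        code = draft(feedback)
        feedback = run_tests(code)
        if feedback is None:
            return code, round_no
    # Out of rounds: hand the task to a human instead of retrying forever.
    return None, max_rounds
```

When the loop returns `None`, the task goes to a human instead of into another round. Three rounds is an arbitrary default, not a recommendation.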

What goes wrong if you ignore the cost of vibe coding

Cost whiplash in your AI budgets

In delivery, there are three dials quietly blowing up AI budgets:

- duty cycle (how long agents run),

- context length (how much code they ingest),

- and tool/retry loops (how often they build, run, and fix).

Turn those up, especially with reasoning modes, and a single “fix this pipeline” request can fan out into thousands of tokens.
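A back-of-the-envelope estimator shows why the dials compound. It assumes simple per-token billing with separate input and output prices. The prices and run profiles are hypothetical placeholders, not any vendor's rate card.

```python
from dataclasses import dataclass


@dataclass
class RunProfile:
    runs_per_day: int    # duty cycle: how often the agent is invoked
    context_tokens: int  # context length: input tokens per model call
    output_tokens: int   # output tokens per model call (reasoning inflates this)
    calls_per_run: int   # tool/retry loops: model calls per run


def daily_cost(p: RunProfile, usd_per_m_input: float, usd_per_m_output: float) -> float:
    """Estimated daily spend in USD under plain per-token billing."""
    calls = p.runs_per_day * p.calls_per_run
    input_cost = calls * p.context_tokens * usd_per_m_input / 1_000_000
    output_cost = calls * p.output_tokens * usd_per_m_output / 1_000_000
    return input_cost + output_cost


# Hypothetical prices: $3 per million input tokens, $15 per million output tokens.
calm = RunProfile(runs_per_day=20, context_tokens=8_000, output_tokens=1_000, calls_per_run=2)
hot = RunProfile(runs_per_day=200, context_tokens=60_000, output_tokens=20_000, calls_per_run=8)
print(f"calm: ${daily_cost(calm, 3.0, 15.0):,.2f}/day")  # calm: $1.56/day
print(f"hot:  ${daily_cost(hot, 3.0, 15.0):,.2f}/day")   # hot:  $768.00/day
```

Each dial multiplies the others. Turning all of them up at once moves this toy example by roughly 500x, not 10x.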

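That’s why budgets feel slippery. There’s also a structural reason costs spike. Reasoning models inflate output-token volume (CB Insights puts it at roughly 20x), and under per-token billing every one of those extra tokens lands on the invoice.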

The predictable consequence is a pricing pivot across the category: more pass-through, usage-based, and capped models to align revenue with compute.

Vendor volatility

Teams shouldn’t assume their AI vendor will look the same in six months.

AI-driven M&A is busy, and the deals are getting bigger. CB Insights’ State of Tech Exits shows steady volume, larger deals, and record AI values. That is exactly the mix that reshuffles roadmaps and resets prices.

Beyond classic acquisitions, reverse acqui-hires (aka hiring the team while licensing the tech) are gaining momentum, which can leave products in awkward limbo for customers who’ve built workflows on top of them.

In practice, that means you design for portability first (bring-your-own-model, or BYOM, options; exportable indices; API version pinning). You also negotiate continuity and price protection up front, not after the press release.

Vibe coding: great for scripts, bad for systems

The consensus in tech forums and over coffee is that vibe coding feels productive today but ages poorly without structure.

It’s solid for small scripts, glue tasks, and spikes. It falls apart on scalable apps and team code unless someone keeps a supervising eye on it, with clear goals, tests, reviews, and design notes.

Our read matches that: keep the vibe, wrap it in process. Treat agents like eager juniors working behind CI gates, with prompts landing as PRs, not direct pushes.

How should tech buyers adopt AI coding agents with efficient AI budgets?

Start with one bounded workflow, and give it 30 days

When development teams ask where to begin, we often tell them to pick something boring and steady. Not a moonshot, not a hero refactor. Think:

- “generate and maintain tests for one service,”

- “fix or scaffold a handful of Airflow DAGs,”

- “spin up CRUD endpoints from an OpenAPI spec,”

- or “migrate a family of SQL queries with saner plans.”

Then make success measurable before you write the first prompt: a small gold test set (inputs and expected outputs), a baseline of how long these tasks take today, and the simple stats that matter tomorrow – like P50/P95 task time, how many human edits per PR, and how many defects your CI catches.
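You don't need a platform to start tracking those stats. Here is a minimal sketch. It assumes you log one record per agent task; the field names and the nearest-rank percentile method are our own hypothetical choices.

```python
import math
from dataclasses import dataclass
from typing import Any, Callable, List, Sequence, Tuple


@dataclass
class TaskRecord:
    minutes: float    # request to merge-ready PR, wall clock
    human_edits: int  # edits a human had to make on the agent's PR
    ci_defects: int   # defects CI caught before merge


def percentile(values: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: smallest value with at least pct% of data at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def pilot_summary(records: List[TaskRecord]) -> dict:
    """The handful of numbers worth comparing against your pre-agent baseline."""
    minutes = [r.minutes for r in records]
    return {
        "p50_minutes": percentile(minutes, 50),
        "p95_minutes": percentile(minutes, 95),
        "edits_per_pr": sum(r.human_edits for r in records) / len(records),
        "ci_defects": sum(r.ci_defects for r in records),
    }


def gold_pass_rate(cases: List[Tuple[Any, Any]], solve: Callable[[Any], Any]) -> float:
    """Share of gold (input, expected) cases where solve(input) == expected."""
    passed = sum(1 for given, expected in cases if solve(given) == expected)
    return passed / len(cases)
```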

With this, you’ll discover very quickly whether the agent is genuinely accelerating work or just creating the illusion of progress.

Pick the right model for the job, then blend

We love frontier models for the moments that actually require reasoning: big cross-repo refactors, tricky planning, “figure this out across five tangled modules.”

But, sadly, most day-to-day factory IT isn’t that.

Deterministic scaffolding, code transforms, doc extraction, conventional CRUD… sing on small models with retrieval, especially when you keep context local and tests tight.

Our default pattern is simple: let a small, often on-prem model draft; run CI; only escalate failures to a hosted frontier model. It preserves the vibe of moving fast while keeping your costs and risk under control.
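The cascade fits in a few lines. This is a sketch, not a product. `small`, `frontier`, and `ci_passes` are hypothetical callables that you would wire to your own on-prem model, hosted model, and CI run.

```python
from typing import Callable, Tuple

Model = Callable[[str], str]     # task prompt -> candidate code
CiCheck = Callable[[str], bool]  # candidate code -> did CI pass?


def draft_then_escalate(task: str, small: Model, frontier: Model,
                        ci_passes: CiCheck) -> Tuple[str, str]:
    """Try the cheap model first; pay for the frontier model only on CI failure."""
    candidate = small(task)
    if ci_passes(candidate):
        return candidate, "small"
    candidate = frontier(task)
    if ci_passes(candidate):
        return candidate, "frontier"
    raise RuntimeError("both models failed CI; route the task to a human")
```

The frontier model only sees tasks the small model couldn't pass. That way your expensive tokens go where the reasoning actually is.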

Make costs predictable or don’t ship

Token pricing looks fair on paper and works fine for spiky experiments. The trouble starts when agents run every day, in the background, for the same classes of jobs. That’s when “just one more tool call” turns a cheap task into a surprise bill.

The fix isn’t magic. It’s contract and architecture.

Push for seat-based access paired with a pooled compute allowance you can cap and alert on; or buy per-workflow bundles with clear ceilings; or run inference in your own cloud and pay the vendor for software, not tokens.
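You can prototype a pooled allowance with a cap and alerts before it goes into a contract. Here is a minimal in-memory sketch. The class and its 80% alert threshold are illustrative assumptions. A real version would live in your gateway or proxy, not in application code.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ComputePool:
    cap_tokens: int           # hard monthly ceiling agreed with finance
    alert_ratio: float = 0.8  # warn once usage crosses this share of the cap
    used_tokens: int = 0
    alerts: List[str] = field(default_factory=list)

    def charge(self, team: str, tokens: int) -> bool:
        """Record usage; refuse (return False) if it would breach the hard cap."""
        if self.used_tokens + tokens > self.cap_tokens:
            self.alerts.append(f"BLOCKED {team}: {tokens} tokens would exceed the cap")
            return False
        before = self.used_tokens
        self.used_tokens += tokens
        threshold = self.alert_ratio * self.cap_tokens
        # Alert only on the charge that crosses the threshold, not on every later one.
        if before < threshold <= self.used_tokens:
            self.alerts.append(f"WARN: pool at {self.used_tokens / self.cap_tokens:.0%} of cap")
        return True
```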

Those models map revenue to compute in a way finance can live with. They also reflect where the market is drifting as vendors react to buyer pushback on budgeting token-metered usage.

Under the hood, we watch three dials like a hawk: duty cycle (how continuously agents run), context length (how much you stuff into each request), and tool/retry loops (how often we lint, build, execute, and retry).

If a vendor can’t show you usage curves and hard caps for those three, the pricing isn’t enterprise-ready, no matter how friendly the UI looks.

Design for portability on day one

Even though this post is about cost and delivery, we can’t pretend the market is static.

AI dealmaking is lively, and AI-driven acquisitions are a larger share of tech M&A than they used to be. That’s great for headlines and often disruptive for customers.

The antidote is portability: insist on open model support or BYO model, keep your embeddings and indices exportable, pin API versions with reasonable deprecation windows, and write continuity and price-protection into the contract so an ownership change doesn’t become your rebuild. The trend line justifies the paranoia.
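In code, portability mostly means business logic never calls a vendor SDK directly. Here is a minimal sketch. It assumes a hypothetical `ModelPin` config and an adapter registry. `EchoModel` is a stand-in; a real adapter would wrap a vendor or self-hosted client, and the model name used below is made up.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Protocol


@dataclass(frozen=True)
class ModelPin:
    provider: str     # key into the adapter registry, e.g. "self-hosted"
    model: str        # exact model identifier, never a floating alias
    api_version: str  # pinned API version the adapter must send


class CodeModel(Protocol):
    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in adapter for tests; a real one would wrap a vendor or self-hosted client."""

    def __init__(self, pin: ModelPin) -> None:
        self.pin = pin

    def complete(self, prompt: str) -> str:
        return f"[{self.pin.model}@{self.pin.api_version}] {prompt}"


def build_model(pin: ModelPin, adapters: Dict[str, Callable[[ModelPin], CodeModel]]) -> CodeModel:
    """Resolve a pinned config to an adapter; business code only sees CodeModel."""
    if not pin.api_version or pin.model.endswith("latest"):
        raise ValueError("pin an exact model and API version, not a floating alias")
    if pin.provider not in adapters:
        raise KeyError(f"no adapter registered for provider {pin.provider!r}")
    return adapters[pin.provider](pin)
```

Swapping vendors then becomes a config change plus one new adapter. Refusing "latest" also stops an ownership-driven model swap from landing silently.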

Keep the vibe, wrap it in engineering

The way we keep the good and lose the bad is boring but effective: prompts land as PRs with tests, not direct pushes; anything that touches production gets a one-pager up front (goal, constraints, acceptance checks); CI gates stay on (unit and integration tests, SAST, secrets, licenses); and we own our telemetry so we can reproduce who asked an agent to do what, when, and with which model.
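Owning your telemetry can start as an append-only log. Here is a minimal sketch. It assumes a JSON Lines file and field names we picked for illustration. In production you would ship these records to whatever log store you already run.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class AgentAuditRecord:
    requested_by: str  # who asked
    task: str          # what they asked for (ticket id or prompt summary)
    model: str         # which model and version served the request
    pr_url: str        # where the change landed for review
    timestamp: str     # when, as UTC ISO-8601


def new_record(requested_by: str, task: str, model: str, pr_url: str,
               now: Optional[datetime] = None) -> AgentAuditRecord:
    when = now or datetime.now(timezone.utc)
    return AgentAuditRecord(requested_by, task, model, pr_url, when.isoformat())


def to_jsonl(record: AgentAuditRecord) -> str:
    """One JSON object per line, so the log stays append-only and grep-friendly."""
    return json.dumps(asdict(record), sort_keys=True) + "\n"


def append_record(path: str, record: AgentAuditRecord) -> None:
    with open(path, "a", encoding="utf-8") as fh:
        fh.write(to_jsonl(record))
```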

That’s how you avoid the next-day hangover where yesterday’s magic turns into today’s rework.

Go on-prem when the math and the mandate say so

If the workload is steady and the data is sensitive, on-prem inference often beats the cloud on both cost and control, especially once you factor in egress and managed-service premiums. But it’s not a free lunch. You need the basics: patch cadence, backups, observability, and a team that knows its way around GPUs and storage paths.

For bursty traffic or thin ops benches, keep it simple and stay hosted; for predictable duty cycles and clear compliance needs, repatriation pencils out more often than people think.
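Whether repatriation pencils out is mostly arithmetic. Here is a rough break-even sketch. Every number in it is a hypothetical placeholder. It also ignores factors your own model should include, such as hiring and hardware refresh risk.

```python
def monthly_hosted(tokens_m: float, usd_per_m: float, egress_usd: float) -> float:
    """Hosted cost: blended price per million tokens plus data egress."""
    return tokens_m * usd_per_m + egress_usd


def monthly_onprem(hardware_usd: float, amortize_months: int,
                   ops_usd: float, power_usd: float) -> float:
    """On-prem cost: hardware amortized straight-line, plus ops time and power."""
    return hardware_usd / amortize_months + ops_usd + power_usd


def breakeven_tokens_m(usd_per_m: float, egress_usd: float, onprem_usd: float) -> float:
    """Monthly volume (millions of tokens) at which hosted and on-prem cost the same."""
    return max(0.0, (onprem_usd - egress_usd) / usd_per_m)


# Hypothetical inputs, for illustration only.
onprem = monthly_onprem(hardware_usd=120_000, amortize_months=36, ops_usd=6_000, power_usd=1_000)
volume = breakeven_tokens_m(usd_per_m=5.0, egress_usd=500, onprem_usd=onprem)
print(round(volume))  # 1967
```

Above that monthly volume, on-prem comes out cheaper in this toy model. Steady traffic can sustain that volume every month; bursty traffic usually can't, which is why duty cycle matters as much as price.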

The through-line in all of this is simple: start small and watch closely, match the model to the job, make the AI budgets boring, and keep your exits open.

Do that, and the “vibe” becomes a force multiplier instead of a mess to clean up later.

Faster merges, lower spend, fewer surprises

When this lands well, the day feels different.

Before, our engineers were hand-crafting connectors and chasing flaky tests, and refactors were the thing everyone agreed was “important next quarter.”

The agent trials kept getting paused because a single “quick fix” quietly fanned out into a thousand tokens.

Finance couldn’t forecast.

Platform was fielding late-night pings about overages. We were moving fast in the moment and paying for it later.

After a disciplined pilot, everything changes. The agent handles the scaffolding and the test grind, and pull requests arrive with checks already green.

Now, developers spend their brainpower on design choices and edge-case handling, not boilerplate.

The cost dashboard finally does its job: seats are fixed, the compute pool has hard caps, and month-end spend lands within the range we agreed on instead of arriving as a surprise.

No one is sneaking personal accounts anymore because the official path is faster and safer.

You’ll see it in the engineering signals, too.

Time-to-merge shrinks without the review quality falling off a cliff. Defect escape rate heads in the right direction because tests aren’t an afterthought. Change failure rate drops because every agent change is a PR behind CI, not a vibe-driven push. On-call is quieter. Secrets scanning, SAST, and license checks run in the background and stay out of the headlines.
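If you want those signals on a dashboard, the definitions are short. This sketch assumes each merged agent change can be tagged with its time-to-merge and with whether it caused a production incident. The record shape is hypothetical.

```python
from dataclasses import dataclass
from statistics import median
from typing import List


@dataclass
class MergedChange:
    hours_to_merge: float
    caused_incident: bool  # did this change trigger a production failure?


def median_time_to_merge(changes: List[MergedChange]) -> float:
    return median(c.hours_to_merge for c in changes)


def change_failure_rate(changes: List[MergedChange]) -> float:
    """Share of merged changes that led to a production failure."""
    return sum(c.caused_incident for c in changes) / len(changes)


def defect_escape_rate(found_in_prod: int, found_before_merge: int) -> float:
    """Share of all known defects that slipped past review and CI."""
    total = found_in_prod + found_before_merge
    return found_in_prod / total if total else 0.0
```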

The human test is also simple: sprint review conversations stop being about the model we tuned and start being about the business problem we shipped.

A new hire can open the repo, read the prompt log and the PR history, and pick up where the agent left off without a nerve-wracking guided tour. And when your CFO asks, “Are we on budget?” the answer is a calm yes.

Over to you

Onboarding AI coding agents isn’t the win. Keeping them on budget, on process, and on spec is.

The way we do it is boring but always effective: start with one steady workflow, measure against a gold test set, draft on a small model and escalate only when CI says so, and price with caps that finance can predict.

👉 Want to co-run a 30-minute TCO readout on your tech infrastructure with our team, or simply review a target workflow you’re not sure about? Contact our AI experts for a free consultation.